Sensor Fusion

Nov 24 2021

Sensor Fusion is the process of combining measurements from different sources so that the resulting information has less uncertainty than any of the individual measurements. For example, we can calculate depth information from 2-D images by combining data from two cameras placed at slightly different locations.

The different sources for the information we obtain need not be identical. There are three types of fusion methods:

- Direct Fusion: Fusion of data from a set of heterogeneous or homogeneous sensors, along with the past history of sensor data.

- Indirect Fusion: In addition to the sources used in direct fusion, we also use sources such as a priori knowledge about the environment and human input.

- Combination of both: We can also obtain information by combining the outputs of the two methods above.

Classification of Sensor Fusion Algorithms

Sensor Fusion algorithms can be classified on different parameters:

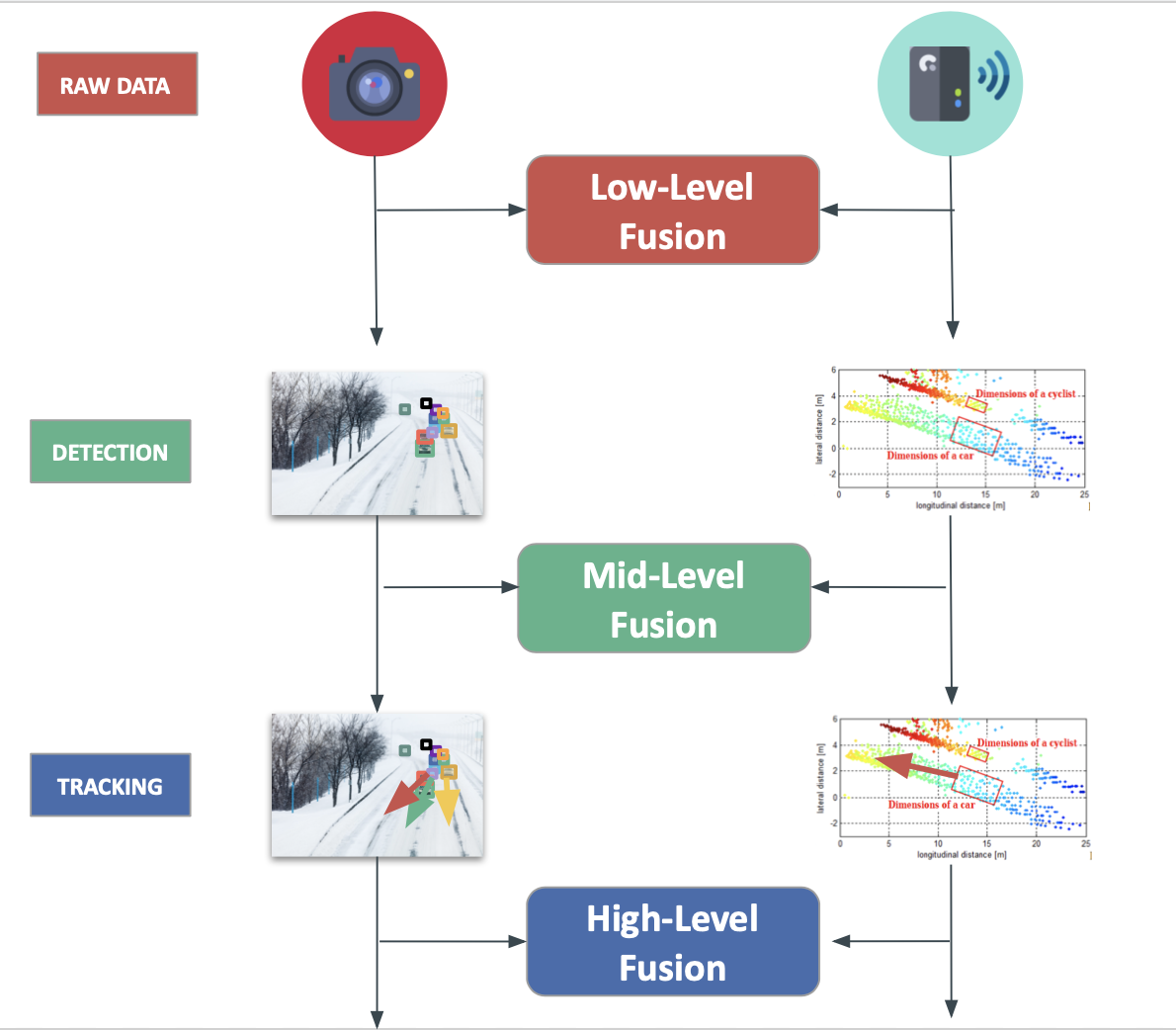

On the basis of Abstraction Level (When?)

- Low Level Fusion: Fusing the raw data coming in from different sensors

- Mid Level Fusion: Fusing the detections from each sensor

- High Level Fusion: Fusing the trajectories (predictions) of each sensor

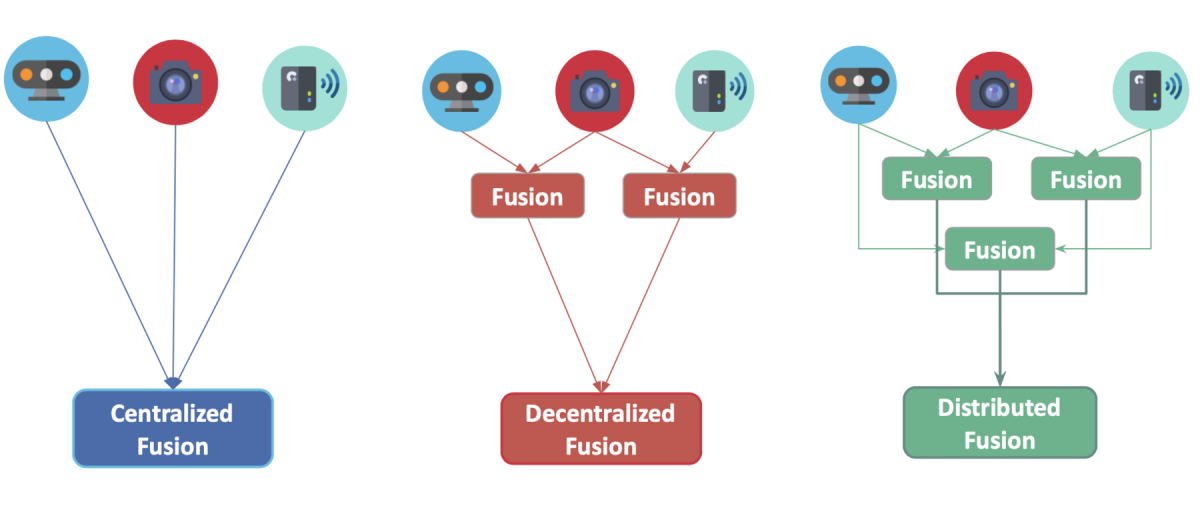

On the basis of Centralization Level (Where?)

- Centralized: A single central unit deals with the fusion

- Decentralized: Each sensor fuses the data and sends it onto the next one

- Distributed: Each sensor processes its own data locally and sends the result to the next unit

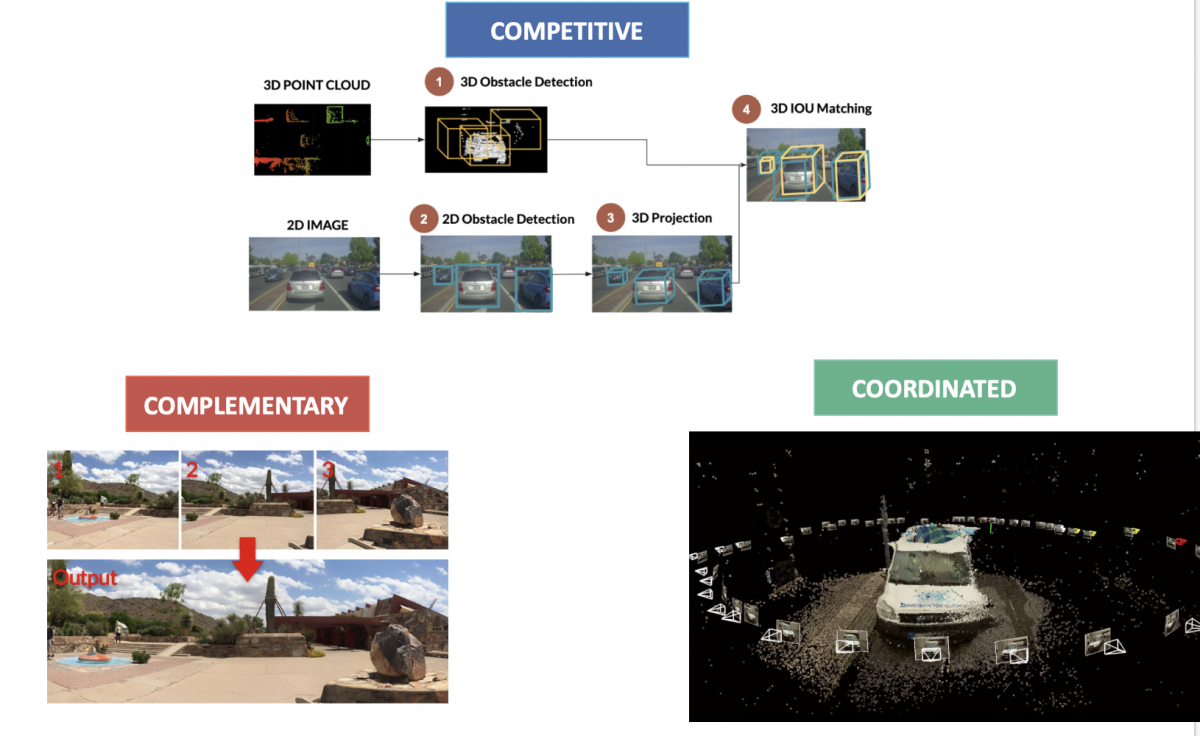

On the basis of Composition Level (What?)

- Competitive Fusion: When different sensors are meant for the same purpose

- Complementary Fusion: When different sensors look at different scenes to obtain data that couldn’t have been obtained had they been used individually

- Coordinated Fusion: When multiple sensors look at the same object to produce a new scene, e.g. 3D reconstruction

For more details regarding the types of sensor fusion, check here.

Example Calculation Regarding Sensor Fusion

For a basic example showing how two measurements can be combined, check this section.
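As a minimal sketch of how two measurements can be combined, the snippet below uses inverse-variance weighting. It assumes the two sensors measure the same quantity with independent, zero-mean noise of known variance. The function name `fuse_measurements` and the readings are hypothetical and chosen only for illustration.

```python
def fuse_measurements(z1, var1, z2, var2):
    """Fuse two independent noisy measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, so the
    more certain sensor contributes more to the fused estimate.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical readings: two range sensors measuring the same distance in metres.
# Sensor 1 reads 10.0 with variance 4.0, sensor 2 reads 12.0 with variance 1.0.
fused, fused_var = fuse_measurements(10.0, 4.0, 12.0, 1.0)
print(fused, fused_var)
```

Under these assumptions the fused estimate lies closer to the more reliable sensor, and the fused variance is lower than the variance of either input, which is the reduction in uncertainty that sensor fusion aims for.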

Algorithms on Sensor Fusion

Based on the Central Limit Theorem

- The central limit theorem states that when we take a large number of independent measurements of a parameter, the distribution of their sample mean tends to a normal distribution. By the law of large numbers, the sample mean also gets closer to the true mean as the number of measurements increases.

- To see its relation to sensor fusion, assume we have two different sensors A and B. The more samples we take of their readings, the more closely the distribution of the sample averages resembles a bell curve centered on the true average value. The closer we get to an accurate average value, the less noise factors into sensor fusion algorithms.

- For more information regarding the central limit theorem, check here.
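The averaging argument above can be sketched with a small simulation. This is an illustration only: the true value, the uniform noise model, and the sample sizes are all assumptions chosen for the demo, and `sample_mean` is a hypothetical helper.

```python
import random
import statistics


def sample_mean(true_value, half_width, n, rng):
    """Average n readings corrupted by uniform (non-Gaussian) noise."""
    readings = [true_value + rng.uniform(-half_width, half_width) for _ in range(n)]
    return sum(readings) / n


rng = random.Random(0)  # fixed seed so the run is repeatable
TRUE_VALUE = 5.0        # hypothetical true value of the measured parameter
TRIALS = 2000           # number of sample means collected per sample size

# Collect many sample means for a small and a large number of readings.
means_small = [sample_mean(TRUE_VALUE, 1.0, 5, rng) for _ in range(TRIALS)]
means_large = [sample_mean(TRUE_VALUE, 1.0, 500, rng) for _ in range(TRIALS)]

print("spread with 5 readings:  ", statistics.pstdev(means_small))
print("spread with 500 readings:", statistics.pstdev(means_large))
print("average of the large-sample means:", statistics.mean(means_large))
```

Even though each individual reading has uniform noise, the sample means cluster around the true value, and their spread shrinks as more readings are averaged.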

Based on Kalman Filter

- A Kalman filter is an algorithm that estimates unknown values from data inputs from multiple sources, even when those inputs carry a high amount of signal noise.

- By combining measurements, it estimates unknown values more accurately than is possible using any of the measurements individually.

- The Kalman filter is a recursive algorithm that depends only on the previous state estimate of the system and the current observed sensor data to estimate the current state of the system.

- For more details regarding the Kalman filter, check here or here.
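The recursion described above can be sketched for the simplest case, a scalar state. This is a minimal sketch, not a general implementation: it assumes a constant-state model (the quantity does not change between steps), Gaussian noise with known variances, and two hypothetical sensors A and B that take turns reporting. The names `kalman_step` and `SENSORS` are illustrative.

```python
import random


def kalman_step(x, p, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p : previous state estimate and its variance
    z    : current measurement
    q, r : process-noise and measurement-noise variances
    """
    # Predict: the state carries over, uncertainty grows by the process noise.
    x_pred = x
    p_pred = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


rng = random.Random(1)               # fixed seed so the run is repeatable
TRUE_VALUE = 25.0                    # hypothetical quantity being measured
SENSORS = [(2.0, 4.0), (0.5, 0.25)]  # (noise std, noise variance) for A and B

x, p = 0.0, 1000.0                   # vague initial guess with large variance
for step in range(50):
    noise_std, r = SENSORS[step % 2]  # sensors A and B alternate
    z = TRUE_VALUE + rng.gauss(0.0, noise_std)
    x, p = kalman_step(x, p, z, q=1e-5, r=r)

print(x, p)
```

Each step uses only the previous estimate `(x, p)` and the newest measurement `z`, so no history of readings needs to be stored. The final variance `p` ends up below the measurement variance of either sensor.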

Based on Bayesian Networks

- Bayes' rule in probability is the backbone of the state update equations used for sensor fusion. Bayesian networks, which are built on Bayes' rule, predict the likelihood that any given measurement is a contributing factor in determining a given parameter.

- For a detailed study of Bayesian Networks, check here.

- Some of the algorithms used for Sensor Fusion based on Bayesian networks are K2, hill climbing, and simulated annealing, which search for a network structure that fits the data.
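To make the role of Bayes' rule concrete, here is a minimal sketch of a belief update from two sensors. It is not a full Bayesian network and does not show K2, hill climbing, or simulated annealing. It assumes the two sensors are conditionally independent given the hypothesis, and all probabilities are hypothetical values picked for illustration.

```python
def bayes_update(prior, p_obs_given_h, p_obs_given_not_h):
    """Return P(H | observation) from P(H) and the two likelihoods."""
    numerator = p_obs_given_h * prior
    evidence = numerator + p_obs_given_not_h * (1.0 - prior)
    return numerator / evidence


# Hypothesis H: "there is an obstacle ahead". Start with no preference.
belief = 0.5

# Sensor A fires: P(fire | obstacle) = 0.9, P(fire | no obstacle) = 0.2.
belief = bayes_update(belief, 0.9, 0.2)

# Sensor B also fires: P(fire | obstacle) = 0.7, P(fire | no obstacle) = 0.1.
belief = bayes_update(belief, 0.7, 0.1)

print(belief)
```

The posterior from the first sensor becomes the prior for the second, which is the same update pattern used in recursive state estimation. After both detections the belief in an obstacle is higher than either sensor alone would justify.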

The Dempster-Shafer Theory

- This theory, also called the theory of belief functions or evidence theory, is a general framework for working with uncertainties and measurements.

- Dempster–Shafer theory is based on two ideas: obtaining degrees of belief for one question from subjective probabilities for a related question, and Dempster's rule for combining such degrees of belief when they are based on independent items of evidence.

- For more details on this theory, check here or here.
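Dempster's rule of combination can be sketched as follows. The sketch assumes two independent sources, each described by a mass function over subsets of a small frame of discernment. The frame (`car`, `truck`), the two sources (`camera`, `radar`), and their masses are hypothetical.

```python
from itertools import product


def combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses to its mass.
    Mass assigned to conflicting (disjoint) pairs is discarded and the
    remainder is renormalised.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    return {s: mass / (1.0 - conflict) for s, mass in combined.items()}


CAR = frozenset({"car"})
TRUCK = frozenset({"truck"})
EITHER = CAR | TRUCK  # mass left on "don't know which"

camera = {CAR: 0.6, TRUCK: 0.1, EITHER: 0.3}
radar = {CAR: 0.5, TRUCK: 0.2, EITHER: 0.3}

fused = combine(camera, radar)
for hypothesis, mass in fused.items():
    print(sorted(hypothesis), round(mass, 4))
```

Unlike a plain probability distribution, each source can leave part of its mass on the undecided set `EITHER`. When the two sources mostly agree, combining them concentrates mass on the shared hypothesis and shrinks the undecided mass.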

Convolutional Neural Networks

- Convolutional neural network based methods can process many channels of sensor data simultaneously. By fusing this data, they produce classification results based on image recognition.

- For a detailed study of CNNs, check here.
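The idea of processing several channels at once can be illustrated with a single convolution filter written in plain Python. This is only a sketch of the core operation: a real CNN learns its kernels from data and stacks many such layers. The two input patches (`camera`, `lidar`) and the averaging kernel are hypothetical.

```python
def conv2d_multichannel(channels, kernels):
    """Valid 2-D convolution (cross-correlation) summed over input channels.

    channels: list of C 2-D grids (lists of rows), all the same size
    kernels:  list of C 2-D kernels, one per channel, all the same size
    """
    height, width = len(channels[0]), len(channels[0][0])
    kh, kw = len(kernels[0]), len(kernels[0][0])
    out = []
    for i in range(height - kh + 1):
        row = []
        for j in range(width - kw + 1):
            total = 0.0
            # Every channel contributes to the same output cell.
            for grid, kernel in zip(channels, kernels):
                for di in range(kh):
                    for dj in range(kw):
                        total += grid[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


# Hypothetical 3x3 patches: camera intensity and lidar depth for the same region.
camera = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
lidar = [[9, 8, 7], [6, 5, 4], [3, 2, 1]]
mean_kernel = [[0.25, 0.25], [0.25, 0.25]]

feature_map = conv2d_multichannel([camera, lidar], [mean_kernel, mean_kernel])
print(feature_map)
```

Each output cell depends on both input channels, so the fusion happens inside the filter itself rather than in a separate step.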

Conclusion

Sensor Fusion is a vast field, with a huge number of algorithms to combine sensor data to obtain measurements. The mathematics behind sensor fusion is often complicated and requires a good understanding of concepts of probability. The goal of this article was to give a brief overview of different types of sensor fusion and to give a bird’s eye view of the various algorithms that can be used for sensor fusion.